Long time no see – I’m back from my first international vacation since 2019! I went back to Japan and Taiwan for the first time in over six years. It was so great to see friends I hadn’t seen in many, many years, eat great food, and see how things have changed.

It also finally sank in for me that I’ve really graduated from the PhD program, since my last quarter was quite chaotic, with student protests and labor strikes on campus. I’m free!

When asked how I feel about being PhDone, I often describe the meme of someone breaking free from chains against a sunset. The truth is that it wasn’t until I started my business, Dr. Write-Aid Consulting, that I realized just how limiting some things were in graduate school. Although I worked for over four years in a writing center doing some of the same work I do now (writing consultations, workshops, etc.), there were restrictions on how we as graduate student staff could support students.

University Writing Consultations vs. Dr. Write-Aid Consulting

For example, in the university writing center, graduate writing consultants are not allowed to read students’ work before an appointment. They cannot provide feedback on anything in advance, and any feedback they do provide is limited to a maximum of two 60-minute consultations per week. The policy of not providing feedback in advance can be limiting because most people learn to write by working from written feedback, and graduate students in particular need to be able to turn written feedback into concrete revision strategies they can put into action. That said, this policy does protect graduate student staff labor to some extent, so I can’t say it exists without good reason.

Although I now do some of the same work I used to do as a student employee, I feel so much more free, creative, and flexible in how I provide consultations. I work outside of limitations that I wasn’t even aware of as a graduate student. It’s important to me to provide flexible, customized support for my clients, because I now truly know just how limited support can be within a university institution.

Policy

Of course, working without some restrictions doesn’t mean I work without any restrictions. Part of my policy is about artificial intelligence (AI) technology, including generative AI tools such as ChatGPT. Many, many people I talk with ask me about ChatGPT – whether AI steals my customers, destroys my business, and so on. The truth is that we have different audiences.

In my experience, most people who are interested in my services are also interested in using AI to refine their writing in some way, whether that’s using Grammarly or ChatGPT. However, the people who actually purchase my services and become my clients are not satisfied, wholly or even partially, with using AI to support their thinking and intellectual work.

At Dr. Write-Aid Consulting, I do not write any text for clients, and I do not use generative AI (including ChatGPT) for any of my services. I ask my clients to refrain from using generative AI tools on relevant projects during our working relationship, and if I suspect any use, I may intervene immediately as I see fit.

The reason is that I see little consistency in how universities and other institutions regulate the use of generative AI in works such as dissertations, academic articles, and classroom assignments. I also work in instructional design, and I once saw an instructor list “AI literacy” among their course learning objectives. When I asked how they intended to teach students to use AI tools ethically and sustainably to support their intellectual work and writing, the instructor said they didn’t know yet. The main point of consensus I see among faculty and instructors is that AI is changing the educational landscape and everyone is trying to keep up on the policy side.

Research

If instructors and faculty have little consensus on how to teach students in the age of generative AI, what am I supposed to do when their students come to me for help with their writing? I find it hard to foresee how liabilities and complications related to generative AI may develop in the future. So I do not use it, and I ask my clients to refrain from using it on relevant projects during our working relationship.

Policy is different from personal perspective, however. While I personally don’t use ChatGPT at all, I find it interesting that many people do use it, and in a variety of situations. I’ve met many graduate students, faculty, undergraduate students, and professionals who use ChatGPT for everything from emails to dissertations to publications. My main curiosity is how ChatGPT affects the learning experience and the writing process, especially in situations where you cannot rely on these tools to communicate effectively with others.

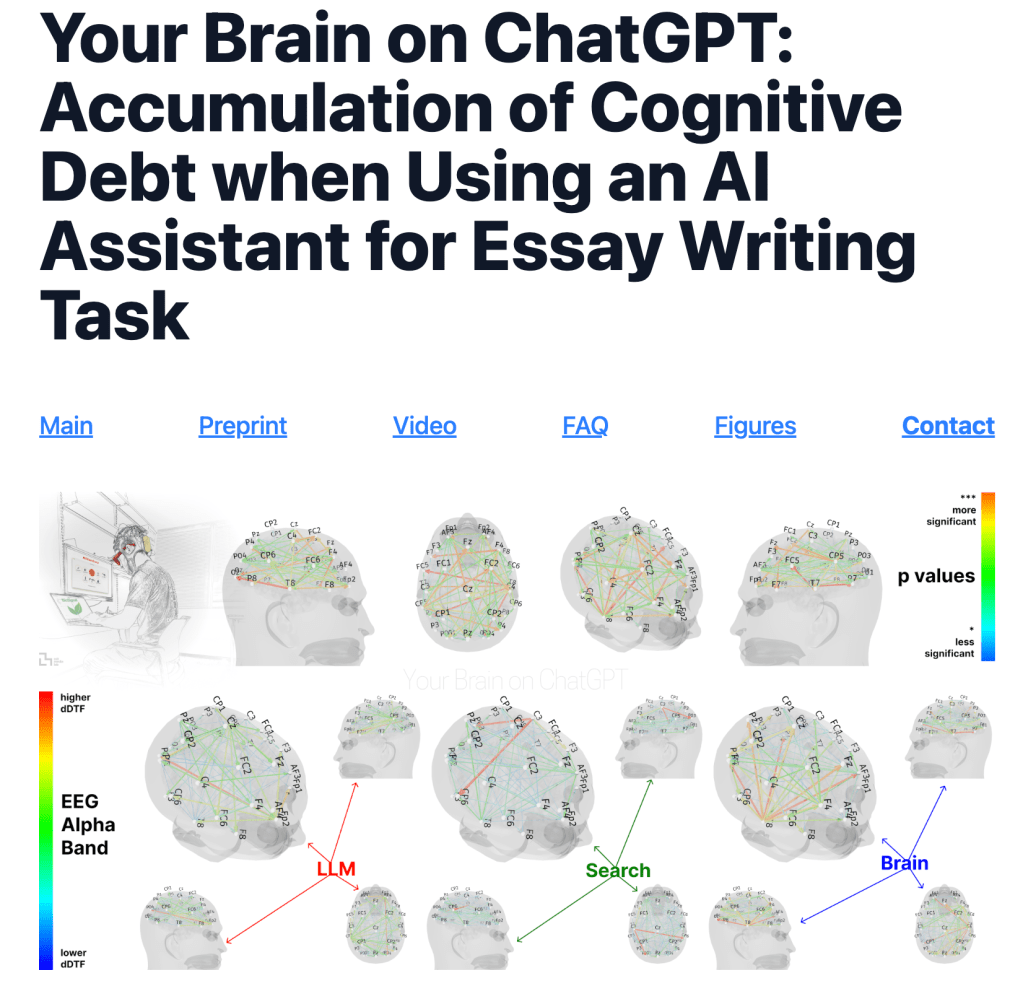

There’s a relevant 2025 study from MIT about how using ChatGPT affects brain activity. As reported in TIME magazine, the MIT researchers describe how participants who used ChatGPT to write SAT essays showed “significantly different” brain activity patterns from participants who used only a search engine and participants who used only their brains. Specifically, participants who used ChatGPT showed the “weakest” activity, while participants who used only their brains showed the “strongest, widest-ranging networks” of brain activity to generate ideas, formulate language, and search their memory.

Most interestingly, the researchers found that the brain-only participants felt the highest levels of ownership of and satisfaction with their work, compared to their peers who used ChatGPT or a search engine. These findings suggest that nothing feels quite as good as using only our brains to communicate our thoughts to each other. My question, then, is about the significance of generative AI tools. Does it matter if people use ChatGPT to write their own work? Why or why not?

Synchro U and I, and AI

In thinking more broadly about AI, I’m reminded of the Korean variety show Synchro U. On the show, famous singers perform song covers, and the hosts and audience must figure out whether each song is performed by AI or by the real singer, who might show up behind a revolving door.

Does it matter whether the voice of the song comes from a machine or from ONEW (of SHINee) or Jay Park standing behind the door? It matters to the show hosts, the audience, and especially fans of these singers to see who is behind the revolving door, in the flesh. It matters to some people in some situations, just as it doesn’t matter to others or in other situations.

To me, it’s not very interesting to read or produce AI writing, because the complexity and process behind it are nothing compared to the struggles, triumphs, tangents, and complex ideas that people go through in producing their own writing without generative AI. People have stories about their labor, their experiences, their craft, and their own philosophies about how they write or draw or sing. These stories, processes, and experiences behind the craft are largely flattened by generative AI tools, which produce output mechanically.

How did someone come up with the idea to write about the topic? How did they become interested in writing the piece? What was difficult or easy about writing it? What are some writing strategies that worked well for them? How did the writer push through obstacles to finish the piece? How did the writer learn to write this piece? How did the writer learn to develop their voice in this way? These questions become far more interesting when I ask them of people who don’t use generative AI in their work.

I would like to believe that stories matter, and that the processes and mechanisms of craft matter. These reasons are a huge part of why I love doing writing consultations. I love listening to people’s stories, and it’s really rewarding to understand the struggles and triumphs behind their writing so that I can support writers to be more confident, more efficient, and more successful in their work. Writing is a craft, and I’m interested in the process of it.

This post has become a long story about many things, and technology is developing so rapidly that it is hard to keep up and adjust policy or personal perspectives. I imagine I’ll continue to think about generative AI. I would be curious to know if others have thoughts to add – please feel free to let me know in the comments.

For my next blog post, I’m writing a roundup of research and productivity tools. I used to have a long list written up on my old personal website, and I figured it’s about time I updated and compiled everything. Please let me know if you have any recommendations for research software, citations, or productivity apps!

Thanks for reading!
